Unsurprisingly, teachers are very interested in the brain, and excited about the potential for evidence from psychology and neuroscience to improve what they do (see, for example, the responses to this survey). It’s a shame, then, that many teacher training routes have traditionally given so little time to psychology and the science of learning. Especially if you trained five or more years ago (as I did), it seems to have been very rare to receive more than a cursory introduction to the subject… with one notable exception. However little psychology is covered otherwise, one mandatory inclusion on many teacher training courses is constructivism: the idea that every individual constructs their own understanding and knowledge of the world, based on their own unique experiences. As a result, constructivist ideas are undoubtedly the most widely held ‘folk-psychological’ belief about learning amongst current teachers (see Torff, 1999, and Patrick & Pintrich, 2001, for more on the content of teacher training and changes in trainee teachers’ beliefs about learning).

In this post I am going to argue that this state of affairs is dangerous. Importantly, though, the problem is not that constructivism is wrong. Constructivism works well as a theory of learning. The problem comes when it is assumed that the theory of learning implies a particular pedagogical approach (‘educational constructivism’, or sometimes the closely related approach of ‘constructionism’). Using evidence from neuroscience, I will try to show that there is a great deal of support for constructivism the learning theory, and a good deal less for constructivism the pedagogical approach.

Some of the misapplications of constructivism to pedagogy have been well documented before. Phillips (1995) described ‘The good, the bad, and the ugly’ of constructivist pedagogy, and Hyslop-Margison & Strobel (2007) put a slightly more nuanced slant on the same topic. Much of their focus is on the potential for more radical constructivist views to lead to a relativistic approach to knowledge in which, because knowledge is individually constructed, there is ‘your knowledge’ and ‘my knowledge’ and little scope for any external validation.

My focus here, however, is on the extent to which the operations of the brain, especially during development, can help us to see why constructivism the learning theory does not entail the pedagogy. I’ll use the evidence underpinning the theory of ‘Neuroconstructivism’ (Mareschal et al., 2007), one of the most popular theories of developmental cognitive neuroscience, to help me with this.

Partial representations, and context-specificity

A central feature of neuroconstructivism is that the development of our brains, and the storage of information in them, is hugely context-dependent. At any one moment, activity in the brain is a reflection of the context that the organism finds itself in. Mareschal et al. provide four different levels at which the activity of the brain is constrained by the context in which it occurs: neural, network, bodily and social. We’ll look at examples of context-specificity for each of these in turn in a minute. The important point, though, is that brain activity reflects the precise state of the organism at the time of the event, so any new piece of ‘learning’ will be encoded completely within the context of the learning experience, rather than reflecting any general underlying feature of it. In the terminology of the theory, we are only ever able to create ‘partial representations’: representations of the world which capture some, but not all, of it. Partial representations are, by their very nature, completely context-dependent; that is, they reflect the features of the world (and of the brain) which were the case when the information was originally stored. Let’s look, then, at the four levels which can lead to the creation of such ‘partial’ representations. This section is heavily paraphrased from a previous description of the theory, but I reproduce it here for clarity.

1. Neural context – ‘encellment’

The cellular neighbours of a neuron exert a large influence over its eventual function as a processor of information. The characteristics of its response, and the way in which it connects to and influences other neurons, are in turn dependent on the type and amount of activity that the neuron itself receives. One classic example is captured by the maxim “cells that fire together wire together”: the more that cells communicate with each other, the more their connections are strengthened, and the greater the influence that a preceding cell exerts over the activity of the subsequent cell.

However, the context-dependence of neural activity is not limited to the simple co-operative strengthening of connections: neurons can also compete. Hubel and Wiesel’s Nobel Prize-winning work on vision in cats looked at what happened when the part of the visual cortex receiving input from one eye was understimulated (because they had covered up that eye). They found that after 2–3 months, the nerve cells in the understimulated area began to switch function and process information from the uncovered eye instead.

So what?

The activity of any one neuron is context-specific. This context is constantly changing due to a number of different factors: the ever-changing strengths of connections to potentially thousands of other inputs, competition (or co-operation) between neighbouring cells, or a progressive specialisation of the cell’s function. Therefore a signal from a neuron can only be interpreted as representing that cell’s response to a particular set of circumstances at that specific time: the neural context.

Another consequence of each neuron’s reliance on so many of its neighbours is that any information it encodes is likely to be encoded in a distributed fashion, across large groups of neurons. Such ‘distributed representations’, whilst more robust in the face of damage and brain changes, are also far more likely to be ‘partial representations’, relying as they do on numerous small contributions from different neural sources. They will never capture a concept or an idea in its entirety. Instead, they record a blurred snapshot of some of the key details approximating the concept: a partial representation.

2. Network context – ‘embrainment’

Just as individual neurons can be affected by the context in which they find themselves, so entire brain areas can co-operate, compete and change function as a result of their context within the brain as a whole. On a larger scale than that observed by Hubel and Wiesel, Cohen et al. (1997) found that in people who have been blind from an early age, ‘visual’ cortex begins to take on other functions entirely, such as touch when reading braille. Similarly, if you re-route visual information into a ferret’s auditory cortex (an area that would normally process sounds), the area will begin to respond to visual patterns instead (Sur and Leamey, 2001)! In less drastic fashion, maturation in the brain involves the progressive specialisation of many different brain areas, which gradually take over sole control of functions that previously relied on wider networks. Again, this process can be characterised by competition, with one area gradually coming to exert a dominant influence over a particular kind of processing. Good examples of these sorts of processes have been found in the pre-frontal cortex (PFC) during adolescence, with different sections of that brain area becoming ‘responsible’ for particular functions (see Dumontheil, 2016, for some examples).

So what?

Most formal education takes place during periods of rapid brain development and maturation. Brain areas are progressively specialising and refining their functions, dependent on their relationship to other brain areas and input from the outside world. In this context, the distributed and partial representations that we build of the world are likely to be highly context-dependent, not only on the particular pattern of inputs, but also on the time and stage of development in which the information was learned.

3. Bodily context – ‘embodiment’

The brain does not sit in glorious isolation from the rest of the body. Some hard-wired behaviours of the nervous system, such as reflexes, can in fact form the basis for the beginnings of brain development. Infants make spontaneous reaching movements from an early age, and even newborn infants will move an arm to keep it within a beam of light where they can see it (Van der Meer et al., 1995). These kinds of behaviour initiate feedback mechanisms between the visual and motor areas of the brain and eventually allow for the development of complex visually guided behaviour. Of equal importance, the design of some parts of the body can constrain brain development by ensuring that the brain does not need to develop certain skills: cricket ears are designed to respond preferentially to the male calling song (a sound made by rubbing one wing against the other), which females follow by phonotaxis (movement towards a sound source). The cricket brain has no such specialisation for making this distinction, because the job has already been done (Thelen et al., 1996). In human cognition, examples of ‘embodiment’ might include state-dependent memory: the finding that we recall information more successfully in a similar ‘state’ to the one in which we learned it, for example after exercise (Miles and Hardman, 1984) or even when drunk (Goodwin et al., 1969).

So what?

The development of our brains is constrained and uniquely differentiated by our nervous systems and by the body in which we find ourselves. Again, this is not just the case between individuals but also within individuals as they develop over time, and as they pass through the myriad internal states which characterise our existence. The representations that we have of the world will reflect these changing embodiments, and will be ‘partial representations’ in that they are formed in, and linked to, this embodied context. This therefore provides further scope for learning to be constrained by the situation (in the widest possible sense) in which the information was initially encountered. The Goodwin et al. paper is particularly relevant here, as it tested two outcomes: recognition and transfer. They found that, whilst recognition memory was not significantly affected by a change in state between learning and recall, the ability to transfer the information was. Transfer, as a more complicated cognitive procedure than simple recall, is as a result even more susceptible to being restricted by the context in which it occurs.

4. Social context – ‘ensocialment’

The concept of ensocialment, the idea that the social context for any act of learning is crucial to shaping the learning that takes place, will be the most familiar of these four levels of analysis to educators. Vygotsky’s social constructivist theories are probably the most famous educational application of this sort of idea. People learn from others with more skills than themselves, with more knowledgeable mentors using language and guidance to ‘scaffold’ the learner’s interactions with the world in the most productive manner. The concept of scaffolding (a supportive structure which is gradually removed as the learner gains in ability) is used to one degree or another by almost every major educational approach.

So what?

The type of scaffolding that is used may become inextricably linked to the solution that is produced, to the point where the ‘partial representation’ that we have of the solution is not accessed when the problem is framed differently. In one famous example, children working in Brazilian markets were able to use mathematical strategies on their stalls that they could not reproduce in the classroom. Knowing how to do something in one context is no guarantee of being able to demonstrate the same skill in another.

Neuroconstructivism and constructivist learning

This brief survey has aimed to show how our experiences in the world can only ever lead to context-specific, partial representations of these events in our brains. It should hopefully be immediately clear that this evidence fits very well with many of the main ideas of constructivism as a theory of learning. The constructivist idea that meaning and knowledge are created by the individual in response to their specific experiences and ideas clearly fits very comfortably with the notion of partial representations. Given that each individual will have their own unique neural, network, bodily and social context at any one moment, even the same environmental stimulus is likely to lead to different partial representations of that stimulus in different people.

The neuroscientific evidence also complements the ‘constructive’ aspect of learning very nicely: the building up of ever more complex schemas through the gradual formation of multiple, overlapping partial representations of the world. It also appears to explain the focus of at least some constructivist theories (e.g. Piaget and Dewey) on the individual, highlighting the unique individual context in which any act of learning takes place. Indeed, the individual context is so important that, as we have seen, learning will often not transfer to contexts (even seemingly very similar tasks and environments) which do not share enough of the original features.

Neuroconstructivism and constructivist pedagogy

So far so good. Unfortunately, it has been common practice in education to go a step further, and to assume that the theory of learning implies/favours a particular pedagogical approach. Because constructivism emphasises the unique individual context in which learning takes place (and the individual’s role in the construction of that knowledge), so constructivist pedagogy places the onus on the individual student to construct their own understanding of the world. Teachers are therefore encouraged to design learning environments through which students are able to learn for themselves, sometimes facilitating the learning, but generally providing limited explicit guidance. Because constructivism emphasises the ‘active construction’ of knowledge, so constructivist pedagogy often places increased value on hands-on, ‘active’ or experiential learning (such as by experiment, project or solving real-world problems). This will, naturally, be entirely familiar to any teachers reading this post. If your teacher training was anything like mine, it will have been exactly how you were taught to teach.

Unfortunately, the leap from learning theory to pedagogy is not justified. Constructivism, as conceived purely as a theory of learning by Piaget, was not designed to be associated with any specific pedagogical approach. More importantly, the raft of neuroscientific evidence supporting the theory of ‘neuroconstructivism’ actually, in my view, provides strong evidence to suggest the opposite, that constructivist pedagogies are unlikely to be the most effective approaches to learning, at least until schemas are well developed.

Partial representations, and constructivist pedagogy

Let us return to ‘partial representations’, those context-dependent neural traces which constitute how the brain changes in response to experience. As we have seen, these traces are:

- partial – in that they reflect not the underlying structure of the knowledge but the whole neural, network, bodily and social context in which the knowledge was formed.

- distributed – in that they are made up of numerous small contributions from neurons distributed across brain areas. No one piece of information therefore resides in any one place, and any reactivation of that knowledge will be an approximate reconstruction, rather than a video-tape playback.

- context-dependent – as the knowledge contained in our partial representations often does not transfer to situations that differ from the one in which it was learned.

What relevance does this have for pedagogy? Well, let us take a constructivist-inspired pedagogical approach, which involves minimal explicit guidance being given to a student. Imagine a child over the course of a lesson discovering – through some trial and error and some careful teacher-facilitation in a thoughtfully structured learning environment – the formula for Pythagoras’ theorem. It sounds like a lovely educational experience for both student and teacher. Unfortunately, at the end of this process we have (time-consumingly) produced but a single partial representation, which is likely to be highly susceptible to context-dependency.

What the neuroscience of learning tells us is that, for knowledge to be easily accessed later, students require multiple, overlapping partial representations, which are strengthened through repeated access. In his book ‘The Hidden Lives of Learners’, Graham Nuthall wrote that students need to encounter information three or four times to learn it, and the idea of multiple, overlapping partial representations helps us to see why such repeated exposure to information is so important. From this perspective, it is not the discovery of the strategy which is important for subsequent success, but the repeated exposure to it, and practice at accessing it, multiple times and in multiple different ways. There is therefore nothing wrong with the student learning about Pythagoras in the way described above, provided that they are subsequently afforded repeated opportunities to revisit and practise their new knowledge in different contexts. The problem is that, all too often, demonstration of a skill in the learned context is taken as indicating mastery of the skill in general, so lessons move on after a limited number of demonstrations of any new idea. Neuroconstructivism clearly shows that under such circumstances, we are unlikely to form enough overlapping partial representations to be able to transfer our knowledge to a new context.

‘Neuroconstructivist pedagogy’: Multiple, overlapping, partial representations

What I have tried to show above is that our representations of the world, by their very nature, are only ever ‘partial’ representations. Given that, it makes sense for educators to work to create as many of them as possible, and to strengthen them wherever possible. So is there a particular pedagogical approach that is specifically favoured by the neuroscientific evidence? No. I should be clear: I do not think that neuroscience findings can ever be used to directly conclude that any one pedagogical method is better than others. I do, however, think that the evidence underpinning neuroconstructivism provides some important considerations which different pedagogical approaches can all benefit from taking into account. These considerations are encapsulated in the statement:

Students require multiple, overlapping, partial representations of knowledge in order to transfer it to new contexts.

Given that having knowledge that we are able to transfer to a new context is pretty much the point of education itself, I think this is an important message. Again, however, I am not arguing here in favour of any one pedagogical approach. I have my personal preferences, but I want to keep these separate from what I think the neuroscience evidence objectively tells us. The creation of multiple, overlapping, partial representations could conceivably be achieved through numerous pedagogical approaches, as long as students have the opportunity for repeated exposure to information and multiple chances to apply new knowledge to different contexts. It could be achieved through more explicit or direct instruction methods, through dialogic approaches, through well-structured co-operative or group strategies, even (if you have enough time spare in your curriculum) through repeated overlapping inquiry-style investigations such as the one described earlier. What teachers need to consider is, in their own context, which (combination of) teaching methods is most likely to produce repeated exposure to knowledge, and practice at applying it to different contexts. What this looks like in each classroom is a decision for each individual teacher.

So there we go. Some people are sceptical that neuroscience has anything to offer education. I agree that the neuroscientific evidence doesn’t provide support for any one concrete strategy of instruction (indeed, I don’t think it ever could). I think it does, however, create a simple and powerful question that I continue to ask myself as I evaluate how I teach. It is also a question that I wish I had been asked right at the start of my teaching career. So… how are you going to ensure that you form multiple, overlapping, partial representations?

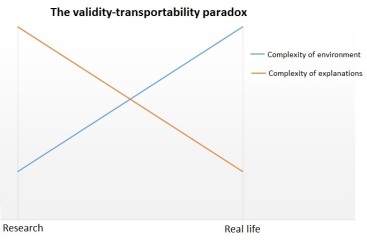

A paradox of attempting to apply ideas from research in the real world is that the simplified environments of scientific experiments allow for the formation of extremely complex explanations, whilst applying those ideas in the more complicated real world often requires that they be somewhat simplified. The ideal conditions for creating validity and those for creating transportability (the easy transmission of an idea into the real world, to borrow a phrase from Jack Schneider’s 2014 book ‘From the Ivory Tower to the Schoolhouse’) are almost completely opposed. This creates a dilemma: how much erosion of validity do we accept in order to allow a theory to become transportable?

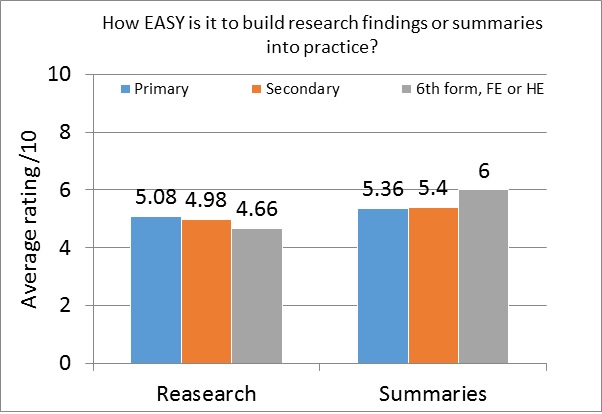

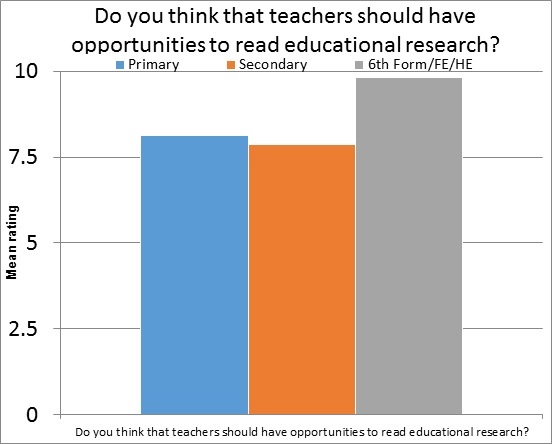

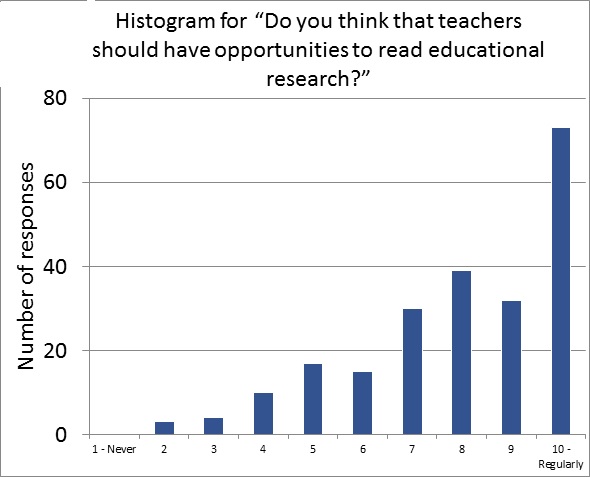

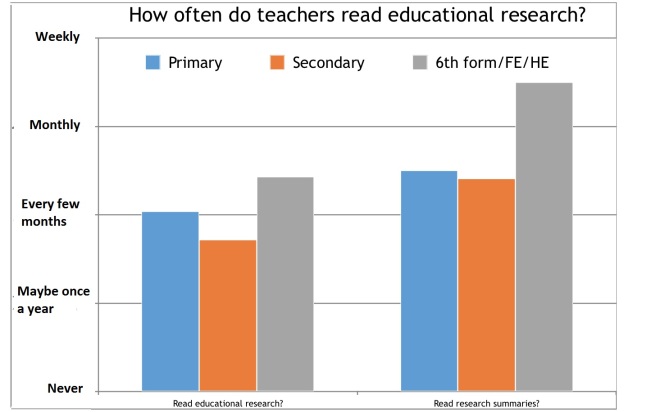

A total of 223 teachers completed the survey, for whose time I am hugely grateful. Perhaps unsurprisingly, given that I was a secondary school teacher, so were the majority of my contacts and so, consequently, were most of the respondents (Primary = 26, Secondary = 191, Sixth Form/FE college = 2, University/HE = 4).

Perhaps some people read lots of research papers and research summaries and other people don’t read anything.